Recently we ran into a bug in our software. We couldn’t store long titles because our database columns are constrained to 180 characters. Until then we hadn’t anticipated on, nor entered titles this long.

To store the records in the database again, we truncated the product title in our application code. Which was easy enough, but the problem remained.

Time to look into it in more detail, what could be wrong?

Our Oracle database is expressing its column length in bytes. Java’s String, however, uses Unicode Standard.

This means that more complex characters like an ä have a String length of 1 in Java but need more bytes in the database, which can result in failing to store the text in the database.

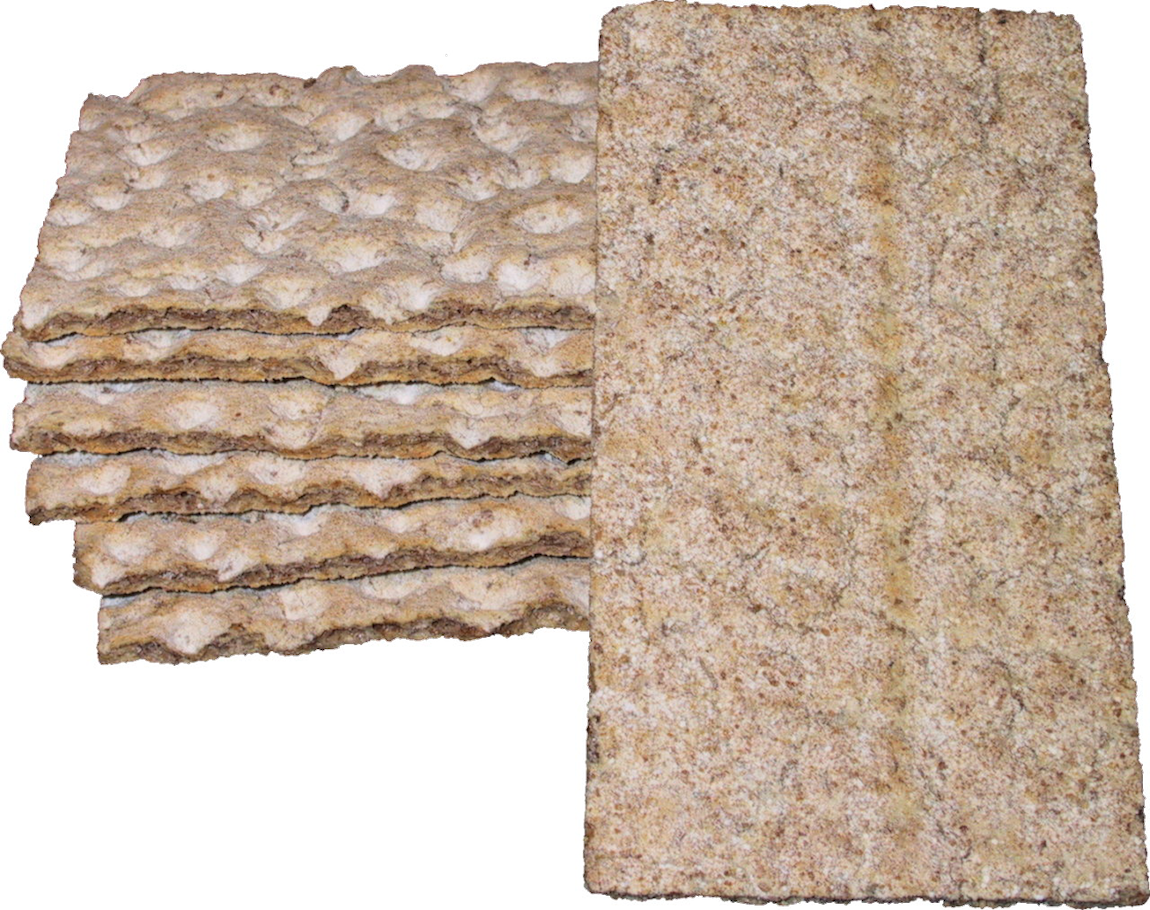

Let’s look at the word Knäckebröd. This has a String length of 10. And a crispness of about 12.

Photo by MatthiasKabel at the German language Wikipedia, CC BY-SA 3.0

The length in bytes can be determined like this:

"Knäckebröd".getBytes(java.nio.charset.StandardCharsets.UTF_8).length

So, how to solve this in a bit more decent fashion in our code?

For that, we use the CharSetDecoder. To transform our Unicode String into the character set that is being used in the database:

CharsetDecoder decoder = Charset.forName(UTF_8).newDecoder(); decoder.onUnmappableCharacter(CodingErrorAction.IGNORE); decoder.onMalformedInput(CodingErrorAction.IGNORE);

What are the ignore configurations for? Basically for two situations:

- Malformed input: when the character sequence is not a legal Unicode sequence.

- Unmappable characters: for when a byte sequence is valid, but the result is not mappable.

We should specify in our code what we want to do in those cases. This can be specified with the CodingErrorAction. There are three options:

- IGNORE: Drop input and continue to next

- REPLACE: Replace the input by specified replacement

- REPORT: Throw a CharacterCodingException

Since there is no logical replacement and reporting makes no sense in our case, since we would want to truncate anyway, we choose to ignore any malformed or mapping errors.

This results in the following code:

private static final String UTF_8 = "UTF-8";

/**

* Truncates a string to a max length based on the bytes needed in UTF-8

* Non parsable bytes that can originate from truncating will be ignored

*

* @param stringToTruncate the input string that needs to be truncated

* @param maxLength the maximum length in bytes

* @return string with a maximum byte length of maxLength in UTF-8

*/

public static String truncateForUTF8ByteLength(String stringToTruncate, int maxLength) {

try {

byte[] inputBytes = stringToTruncate.getBytes(UTF_8);

if (inputBytes.length <= maxLength) {

return stringToTruncate;

}

ByteBuffer outputByteBuffer = createByteBuffer(inputBytes, maxLength);

return createCharsetDecoder().decode(outputByteBuffer).toString();

} catch (UnsupportedOperationException | UnsupportedEncodingException | CharacterCodingException e) {

throw new RuntimeException(e);

}

}

private static ByteBuffer createByteBuffer(byte[] inputBytes, int maxLength) {

byte[] outputBytes = new byte[maxLength];

System.arraycopy(inputBytes, 0, outputBytes, 0, maxLength);

return ByteBuffer.wrap(outputBytes);

}

private static CharsetDecoder createCharsetDecoder() {

CharsetDecoder decoder = Charset.forName(UTF_8).newDecoder();

decoder.onUnmappableCharacter(CodingErrorAction.IGNORE);

decoder.onMalformedInput(CodingErrorAction.IGNORE);

return decoder;

}

Of course, a more structural approach is to make sure lengthy input does not reach our service in te first place. But while we are working on that, for now we won’t get any database errors, and our service can continue its work.

The database parameter NLS_LENGTH_SEMANTICS controls this behaviour. By default it is set to BYTES but it could be set to CHAR.

When using BYTES (default) you set the maximum number of bytes of the column. When inserting ASCII (single byte) characters, 1 character = 1 byte. However, if you store multi-byte characters (like in the Knäckebröd example), 1 character = 2+ bytes and therefore you run into this issue.

You can change your system parameters to use NLS_LENGTH_SEMANTICS=’CHAR’. This way when you specify a varchar2(50), you will actually be able to store 50 characters in your column. In the background, the size (in bytes) of the column might be bigger.

One warning; you can change an existing database from BYTES to CHAR but only new tables will be measured in CHAR. The existing columns will remain counting in BYTES.

https://docs.oracle.com/en/database/oracle/oracle-database/12.2/refrn/NLS_LENGTH_SEMANTICS.html#GUID-221B0A5E-A17A-4CBC-8309-3A79508466F9

Hi Robert,

Thanks for your update. Sounds like a setting to keep in mind for new columns. Our current workaround should not last long we hope. (famous last words 🙂 )